The FAA is redesigning the Class B airspace around SFO, and the redesign signals an interesting shift in air navigation: the requirement that everyone in the airspace be able to navigate by GPS.

They are undertaking the redesign primarily to make flying around SFO quieter and more fuel efficient. The new shape will allow steeper descents at or near “flight idle” — meaning the planes can just sort of glide in, burning less gas and making less noise. As a side benefit, they will be able to raise the bottom of the airspace in certain places so that it is easier for aircraft not going to SFO to operate underneath.

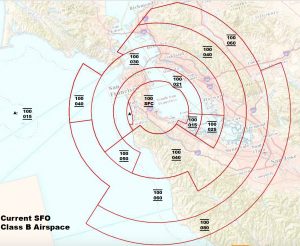

As far as I’m concerned, that’s all good, but I noticed something interesting about the new and old designs. Here’s the old design:

This picture, or one like it, will be familiar to most pilots. It’s a bunch of concentric circles with lines radiating out from the center, dividing the circles into sectored rings. The numbers represent the top and bottom of each section, in hundreds of feet. This is the classic “inverted wedding cake” of a Class B airspace. In 3D, it looks something like this, but more complicated.

This design was based around the VOR, a radio navigation system that tells you which azimuth (radial) you are on relative to a fixed station, such as the VOR transmitter on the field at SFO. A second system called DME, usually coupled with a VOR, tells you your distance from the station. Together they give you your exact position, but because of this “polar coordinate” way of knowing where you are, designs intended to be flown by VOR+DME tend to be made of slices and sectors of circles.
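To make the polar-coordinate idea concrete, here is a rough Python sketch. The function names and the sector numbers are made up for illustration, and it ignores real-world details such as DME slant range and magnetic variation. The point is that with a radial and a distance in hand, checking whether you are inside a ring sector is just two comparisons.

```python
import math

def vor_dme_to_offset(radial_deg, dme_nm):
    """Convert a radial and a DME distance into (east, north) offsets
    from the station, in nautical miles. Treats the radial as a plain
    compass bearing from the station (an assumption of this sketch)."""
    theta = math.radians(radial_deg)
    east = dme_nm * math.sin(theta)
    north = dme_nm * math.cos(theta)
    return east, north

def in_ring_sector(radial_deg, dme_nm, inner_nm, outer_nm,
                   start_radial, end_radial):
    """Return True if the aircraft is between two DME arcs and between
    two radials. Handles sectors that wrap through north (e.g. 350-030)."""
    r = radial_deg % 360
    start = start_radial % 360
    end = end_radial % 360
    in_ring = inner_nm <= dme_nm < outer_nm
    if start <= end:
        in_sector = start <= r < end
    else:
        # Sector wraps past 360 degrees.
        in_sector = r >= start or r < end
    return in_ring and in_sector

# Hypothetical sector: 10 to 15 NM from the station, radials 090 to 130.
print(in_ring_sector(100, 12, 10, 15, 90, 130))
print(vor_dme_to_offset(90, 10))
```

Both inputs come straight off the instruments, which is why the old design could be flown with nothing more than a VOR receiver and a DME.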

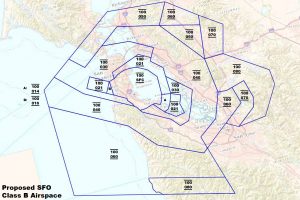

The new proposed design does away with this entirely.

Basically, they just drew lines any which way, wherever it made sense. This map is almost un-navigable by VOR and DME: when a boundary is not based on an arc or a radial, it takes a lot of knob twisting and fiddling to establish your exact position relative to it. In practice, this map is intended for aircraft with GPS.
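By contrast, once a boundary is an arbitrary polygon, the natural test is a point-in-polygon check on your GPS position. Here is a minimal sketch using the standard ray-casting method. The coordinates are invented, and treating them as flat east/north offsets in nautical miles is an assumption of the example; I don't know how any particular GPS unit or app actually does this.

```python
def point_in_polygon(x, y, vertices):
    """Ray-casting test: count how many polygon edges a ray going east
    from (x, y) crosses. An odd count means the point is inside."""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can cross.
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge meets that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical irregular sector, as (east, north) offsets in NM.
sector = [(0, 0), (6, 1), (8, 5), (3, 7), (-1, 4)]

print(point_in_polygon(3, 3, sector))   # a position inside the sector
print(point_in_polygon(10, 3, sector))  # a position east of it
```

This is trivial for a computer that knows your position, and tedious to do in your head from radials and distances.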

All of this is well and good, I guess. GPS has been in every phone, every iPad and every pilot’s flight bag for a long time.

I learned to fly in a transitional era, when GPS existed, but the aircraft mostly had two VOR receivers and a DME. My flight instructor would never have let me use a GPS as a means of primary navigation. Sure, for help, but I needed to be able to steer the plane without it, because the only “legal” navigation systems in the plane were the VORs. I still feel a bit guilty when I just punch up “direct to” in my GPS and follow the purple line. It feels like cheating.

But it’s not, I guess. Time marches on. Today, new aircraft all have built-in GPS, but a lot of older ones don’t. And if they’re going to fly under the SFO Class B airspace, they’re going to need to use one of those iPads to know where they are relative to those airspace boundaries. And, strictly speaking, they probably should get a panel-mounted GPS as well.