I received an email today telling me that Detrumpify had been removed from the Mozilla Add-Ons store because it violated their Terms of Service. There was not, IMHO, nearly enough information in the message to determine how it supposedly violated them, but they claim it threatens protected groups. (Would-be oligarchs?)

Detrumpify for Firefox went up in June of 2016, making it nearly 9 years old. In all that time it never received a complaint of abuse. Last week, I released a version for Firefox on Android. Perhaps that is what precipitated the renewed attention from thin-skinned Trump fans.

Regardless, I’ll do what I can to challenge this, but it may be that Detrumpify for FF will be permanently gone. If so, I would not be surprised to see it removed from the Chrome Web Store as well.

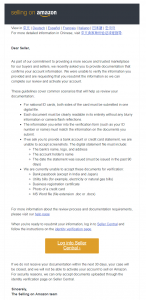

Mozilla’s entire message:

Hello,

Your Extension Detrumpify was manually reviewed by the Mozilla Add-ons team based on a report we received from a third party.

Our review found that your content violates the following Mozilla policy or policies:

– Acceptable Use, specifically Hateful content: This content does not comply with Mozilla’s prohibition of content that degrades, intimidates, incites violence against, or encourages prejudice against members of a protected group. (See <https://www.mozilla.org/about/legal/acceptable-use/>.)

Based on that finding, your Extension has been permanently disabled on https://addons.mozilla.org/en-US/developers/addon/709999/versions and is no longer available for download from Mozilla Add-ons, anywhere in the world. Users who have previously installed your add-on will be able to continue using it.

More information about Mozilla’s add-on policies can be found at https://extensionworkshop.com/documentation/publish/add-on-policies/.

If you believe that you did not violate Mozilla’s policies, or that this decision was otherwise made in error, you have the right to appeal this decision within 6 months from the date of this email. See <<redacted link>> for details on the appeal process, including how to file an appeal for this specific decision. You may also choose to have this decision reviewed by a third party neutral arbiter, or to seek judicial redress in a court of law.

Thank you for your attention.

[ref:<<redacted_jd>>]

—

Mozilla Add-ons Team

https://addons.mozilla.org