It looks like Intel is joining the bandwagon of people who want to take away the analog 3.5mm “headphone jack” and replace it with USB-C. This comes on the heels of Apple announcing that this is definitely happening, whether you like it or not.

There are a lot of good rants out there already, so I don’t think I can really add much, but I just want to say that this does sadden me. It’s not about analog v. digital per se, but about simple v. complex and open v. closed.

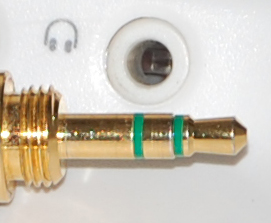

The headphone jack is a model of simplicity. Two signals and a ground. You can hack it. You can use it for other purposes besides audio. You can get a “guzinta” or “guzoutta” adapter to match pretty much anything in the universe old or new — and if you can’t get it, you can make it. Also, it sounds Just Fine.

Now, I’m not just being anti-change. Before the 1/8″ stereo jack, we had the 1/4″ stereo jack. And before that we had mono jacks, and before that, strings and cans. And all those changes have been good. And maybe this change will be good, too.

But this transition will cost us something. For one, it just won’t work as well. USB is actually a fiendishly complex specification, and you can bet there will be bugs. Prepare for hangs, hiccups, and snits. And of course, none of the traditional problems with headphones are eliminated: loose connectors, dodgy wires, etc. On top of this, there will be, sure as the sun rises, digital rights management, and multiple attempts to control how and when you listen to music. Prepare to find headphones that only work with certain brands of players and vice versa. (Apple already requires all manufacturers of devices that want to interface digitally with the iThings to buy and use a special encryption chip from Apple — under license, natch.)

And for nerds/makers, who just want to connect their hoozyjigger to their whatsamaducky, well, it could be the end of the line entirely. For the time being, while everyone has analog headphones, there will be people selling USB-C audio converter thingies: a clunky, additional lump between devices. But as “all digital” headphones become the norm, those adapters will likely disappear, too.

Of course, we’ll always be able to crack open a pair of cheap headphones and steal the signal from the speakers themselves … until the neural interfaces arrive, that is.

EDIT: 4/28 8:41pm: Actually, the USB-C spec does allow analog on some of the pins as a “side-band” signal. Not sure how much uptake we’ll see of that particular mode.