Category: Uncategorized

Marriage proposal from Jezebel

The fine folks at Jezebel want to marry me! Though I am married in Real Life, I see no reason that should preclude an Internet-based group arrangement.

Because this is clearly the beginning and end of my fifteen minutes, I will paste a few comments from the post:

- This is basically a marriage proposal to us as a group, right? We accept so hard.

- This is the best thing that has ever happened in the known universe, space, and time. Ever.

- I am not going to get any work done for the rest of the day…

- this is making me positively giddy

- Firmly believing that the entire Gawker Media empire was brought into existence specifically so this moment could happen. This is fantastic. BRING ON THE AMBITIOUS CORNDOGS, Y’ALL.

- Whoever made this is a goddamn genius.

- You are doing a wonderful service for your country! Love love love this.

- Somebody please tweet this to Colbert? He’s been doing incredible take-downs of Trump and I’m sure would love to demo this on the Late Show.

- Installing this on my work PC was a mistake. I’m crying.

In the words of Ken Burns, I think this really is my Best Idea.

Spinning Waity Things

Agnotology

I like discovering a new word, and am excited to see this one: Agnotology. I learned it today in this profile of Stanford University researcher Robert Proctor, an agnotologist.

Very succinctly, agnotology is the study of intentionally induced ignorance, or of what people I used to work with would call spreading FUD.

That is, the daily work of thousands of people employed in a large segment of corporate America, whose job it is to make sure that people do not understand something (say, vaccine safety or climate change) that might interfere with profitability. I guess if “it is difficult to get a man to understand something when his salary depends on his not understanding it,” then some corollary says it should be easy for another man to help many men not understand something if his salary depends on how many other men do not understand it.

Or something.

Anyway, with so much intentionally induced ignorance pervading our universe these days, like the dark side of the Force, I was happy to see that at least the activity has a name. I wish the agnotologists well, and hope they will come up with some kind of cure or vaccine that will help us contain the stupid-industrial complex that has crept so deeply into our lives and politics.

A different kind of techno-utopianism

What follows is a rather meandering meditation.

Bah, techno-utopianism

There’s a lot of techno-utopianism coming out of Silicon Valley these days. Computers will do our bidding, and we will all be free to pursue lives of leisure. Machines, amplifying human talent, will make sure we are all rewarded (or not) appropriately for our skills and effort.

You already know I’m skeptical. Technology today seems to take as often as it gives: you get slick, you give up control; you get free media, you rent out your eyeballs. A lot of people express a sense of powerlessness when it comes to computing.

And why shouldn’t they? They don’t control the OS on their phone, they don’t even know exactly what an OS is. They didn’t decide how Facebook should work. If they don’t like it, they can’t do much about it, except not use it — hardly an option in this world.

A better techno-utopianism

But I am a techno-utopian in my own way. In my utopia, computers (and software) become flexible, capable building blocks, and people understand them well enough to recompose them for their own purposes. These blocks would be honest, real tools that people, programmers and non-programmers alike, can wield skillfully, without a sense that there is anything hidden or subtle going on under the hood. Basically, we’d all be masters of our technology. I’m not saying it’s realistic; it’s just my own preferred imaginary world.

How Dave Thinks of Computers

When I started my tech career, I was an engineer in the semiconductor business. We had computer aided design (CAD) software that helped us design chips. Logic simulators could help us test digital logic circuits. Circuit simulators could help with the analog stuff. Schematic capture tools let us draw circuits symbolically. Graphic layout tools let us draw the same circuits’ physical representation. Design rule checking tools helped us make sure our circuits conformed to the manufacturing requirements. The list of CAD tools went on and on. And there was a thing about CAD tools: they were generally buggy and did not interoperate worth a damn. Two tools from the same vendor might talk, but tools from different vendors? Forget it.

So we wrote a lot of software to slurp data from here, transform it in some way, and splat it to there. It was just what you had to do to get through the day. It was the glue that made a chip design “workflow” flow.

These glue tools were not works of software engineering art. They were hacks thrown together to get something done by skilled engineers who were not skilled software engineers. The results were not handsome, not shrink-wrap ready, and not user-friendly, but they were perfectly workable for our own purposes.
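Here is a minimal sketch, in Python, of the kind of slurp/transform/splat glue described above. Both file formats are made up for illustration: a hypothetical "tool A" that exports one net per line as `name x y` in micrometres, and a hypothetical "tool B" that wants CSV in nanometres. Real CAD formats were messier, but the shape of the program is the same.

```python
import csv
import io

# Hypothetical export from "tool A": one net per line, "name x_um y_um".
TOOL_A_OUTPUT = """\
clk 10.0 20.5
rst 3.25 7.0
"""


def slurp(text):
    """Read (name, x, y) records from tool A's whitespace-separated format."""
    for line in text.splitlines():
        if line.strip():  # skip blank lines
            name, x, y = line.split()
            yield name, float(x), float(y)


def transform(records):
    """Convert micrometres to whole nanometres, which tool B is assumed to expect."""
    for name, x, y in records:
        yield name, round(x * 1000), round(y * 1000)


def splat(records):
    """Write the records as CSV with a header row and return the text."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["net", "x_nm", "y_nm"])
    writer.writerows(records)
    return out.getvalue()


print(splat(transform(slurp(TOOL_A_OUTPUT))), end="")
```

Keeping the three stages separate means that when tool B's format changes, only `splat` has to be touched.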

That experience really affected the way I view computing. To this day, I see people throw up their hands because Program X simply is incompatible with Program Y; the file formats are incompatible, undocumented, secret. Similarly, people who might write “just ok” software would never dream of trying because they do not have the time or knowledge to write Good, Proper Software.

In my utopia, that barrier would mostly go away.

The real key is knowing “how computers work.”

Khan!!!!

There is a push to teach “coding” in school these days, but I see it as simultaneously too much and too little. It’s too much in that the emphasis on learning to write software is going to be lost on many people who will never use that skill, and knowledge of any particular programming language has a ridiculously short half-life. It is not important that every high school senior be able to write an OS, or even a simple program. They do not need to understand how digital logic or microprocessors work. And teaching them the latest framework seems pointless.

But I do want them to understand what data is, how it flows through a computer, and the different ways it can be structured. When they ask a computer to do something, I want them to have a good, if vague, notion of how much work that “thing” is.

That is, they should understand a computer in the same way Kirk wants Saavik to know “why things work on a starship.”

See, Kirk doesn’t understand warp theory or how impulse engines work, but he knows how a starship “works,” and that makes him a good captain.

How things work on a computer

Which brings me back to my utopia: I want everyone to know how things are done on a computer. Anyone who has spent any length of time around computers knows that certain patterns emerge repeatedly, and a lot of programming carries a constant, vague feeling of déjà vu. That makes sense, because, more or less, computers really only do a few things (and these overlap a lot, too):

- reading data from one (or more) places in memory, doing something with it, and writing the results to another (or more) places in memory

- reading data from an external resource (file, network connection, USB port) or writing it to one (file, network connection, USB port, display, etc.)

- waiting for something to happen, then acting
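The third item, waiting for something to happen and then acting, is often the most mysterious to people. A minimal sketch using only Python's standard library: a background thread stands in for the outside world (a hypothetical source of events), and the main loop blocks on a queue until something arrives. Doubling each value is an arbitrary placeholder for "acting."

```python
import queue
import threading


def producer(events, items):
    """Stand-in for the outside world: deliver some events, then a stop signal."""
    for item in items:
        events.put(item)
    events.put(None)  # sentinel: no more events


def consumer(events):
    """Block until an event arrives, act on it, and repeat until told to stop."""
    handled = []
    while True:
        event = events.get()  # waits here until something happens
        if event is None:
            break
        handled.append(event * 2)  # the "acting" step: read, transform, write
    return handled


events = queue.Queue()
worker = threading.Thread(target=producer, args=(events, [1, 2, 3]))
worker.start()
result = consumer(events)
worker.join()
print(result)  # [2, 4, 6]
```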

With regard to data itself, I want people to understand basic data-structure concepts:

structs, queues, lists, stacks, hashes, files (what they are and why/when they are used). They should know that these can be combined arbitrarily: structs of hashes of stacks of files containing structs, etc.
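As a rough illustration of that kind of composition, here is a Python sketch with a struct (a dataclass) holding a hash (a dict) of stacks (lists) of structs, plus a queue (a deque) and an in-memory stand-in for a file. The names `Point` and `Workspace` are made up for the example.

```python
import io
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Point:
    """A "struct": a fixed set of named fields."""
    x: int
    y: int


@dataclass
class Workspace:
    """A struct holding a hash (dict) that maps names to stacks (lists) of Points."""
    stacks: dict[str, list[Point]] = field(default_factory=dict)


ws = Workspace()
undo = ws.stacks.setdefault("undo", [])  # create the "undo" stack on first use
undo.append(Point(1, 2))                  # push
undo.append(Point(3, 4))                  # push
last = undo.pop()                         # stack: last in, first out

jobs = deque(["a", "b", "c"])             # queue: first in, first out
first = jobs.popleft()

f = io.StringIO()                         # in-memory stand-in for a file
f.write(f"{last.x},{last.y}\n")           # store a struct's fields as a line of text
print(last, first, repr(f.getvalue()))
```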

And finally, I want people to understand something of computational complexity — what computer scientists sometimes refer to as “big-O” notation. Essentially, this is all about knowing how the difficulty of solving a problem grows with the size of the problem. It applies to the time (compute cycles) and space (memory) needed to solve a problem. Mastering this is an advanced topic in CS education, which is why it is usually introduced late-ish in CS curricula. But I’m not talking about mastery. I’m talking about awareness. Bring it in early-ish, in everyone’s curriculum!
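One low-tech way to build that awareness is to count steps rather than time them. This sketch, with made-up function names, counts comparisons for two ways of finding an item: scanning a list front to back, whose work grows linearly with n (O(n)), and binary search on sorted data, whose work grows with the logarithm of n (O(log n)).

```python
def linear_search_steps(items, target):
    """Count comparisons made by a front-to-back scan: O(n)."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Count probes made by binary search on sorted data: O(log n)."""
    lo, hi, steps = 0, len(sorted_items) - 1, 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


for n in (1_000, 1_000_000):
    data = list(range(n))
    worst = n - 1  # the last element is the worst case for the scan
    print(n, linear_search_steps(data, worst), binary_search_steps(data, worst))
```

Going from a thousand items to a million multiplies the scan's work by a thousand but adds only about ten steps to the binary search.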

Dave’s techno-utopia

Back to my utopia. In my utopia, computers and the Internet would not be the least bit mysterious. People would have a gut-level understanding of how they work: for example, what happens when you click Search on Google.

Anyone could slap together solutions to problems using building blocks that they may or may not understand individually, but whose purpose and capabilities they do understand, using the concepts mentioned above. And if they can’t or won’t do that, at least they can articulate what they want in those terms.

In Dave’s techno utopia, people would use all kinds of software: open, proprietary, big and small, that does clever and exotic things that they might never understand. But they would also know that, under the hood, that software slurps, transforms, and splats, just like every other piece of software. Moreover, they would know how to splat and slurp from it themselves, putting together “flows” that serve their purposes.

Worst environmental disaster in history?

In keeping with Betteridge’s Law: no.

My news feed is full of headlines like:

- Damage report reveals LA methane leak is one of the worst disaster in US history

- LA’s Methane Gas Leak Was One of the Biggest Environmental Disasters in US History

- California gas leak was ‘worst climate disaster in US history‘

These are not from top-tier news sources, but they’re getting attention all the same. Which is too bad, because they’re all false by any reasonable measure. Worse, all of the above seem to deliberately misquote a new paper published in Science. The paper does say, however:


This CH4 release is the second-largest of its kind recorded in the U.S., exceeded only by the 6 billion SCF of natural gas released in the collapse of an underground storage facility in Moss Bluff, TX in 2004, and greatly surpassing the 0.1 billion SCF of natural gas leaked from an underground storage facility near Hutchinson, KS in 2001 (25). Aliso Canyon will have by far the largest climate impact, however, as an explosion and subsequent fire during the Moss Bluff release combusted most of the leaked CH4, immediately forming CO2.

Make no mistake about it: it is a big release of methane, equal to the annual GHG output of 500,000 automobiles.

But does that make it one of the largest environmental disasters in US history? I argue no, for a couple of reasons.

Zeroth: because of real, actual environmental disasters, some of which I’ll list below.

First: without the context of the global, continuous release of CO2, this would not affect the climate measurably. That is, by itself, it’s not a big deal.

Second, and related: there are more than 250 million cars in the US, so this is 0.2% of the GHG released by automobiles in the US annually. Maybe the automobile is the ongoing environmental disaster? (Here’s some context: the US is 15.6% of global GHG emissions, transport is 27% of that, and 35% of that is from passenger cars. By my calculations, that makes this incident about 0.003% of global GHG emissions.)
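For anyone who wants to check the arithmetic, here it is in a few lines of Python, using only the figures given in the paragraph above (nothing here is independently sourced). Multiplied through, they put the leak at roughly 0.003% of global GHG emissions.

```python
# Figures as stated in the post; not independently sourced.
leak_in_car_years = 500_000     # leak ~ annual GHG output of this many cars
us_cars = 250_000_000           # "more than 250 million cars in the US"
us_share_of_global = 0.156      # US share of global GHG emissions
transport_share = 0.27          # transport's share of US emissions
passenger_car_share = 0.35      # passenger cars' share of transport emissions

share_of_us_cars = leak_in_car_years / us_cars
share_of_global = (share_of_us_cars * us_share_of_global
                   * transport_share * passenger_car_share)

print(f"{share_of_us_cars:.1%} of US car emissions")     # 0.2% of US car emissions
print(f"{share_of_global:.4%} of global GHG emissions")  # 0.0029% of global GHG emissions
```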

Let’s get back to some real environmental disasters, shall we? You know, the kind that kill people and animals and lay waste to the land and sea? Here is a list of just some of the pretty big man-made environmental disasters in the US:

- Leaded gasoline

- Exxon Valdez

- Gulf Oil Spill

- 1948 Donora Smog

- Rivers catching fire

- Dust Bowl of the 1930s

- Love Canal

- Libby, Montana, asbestos contamination

- Tennessee coal ash spill

- Picher, OK, mine-tailing ghost town

- Texas City, Texas: town blown up

- Gulf of Mexico Dead Zone

Of course, opening up the competition to international disasters, including US-created ones, really expands the list, but you get the picture.

All this said, it’s really too bad this happened, and it will set California back on its climate goals. I was saddened to see that SoCal Gas could not cap this well quickly, or at least figure out a way to safely flare the leaking gas.

But it’s not the greatest US environmental disaster of all time. Not close.

Privatization, aluminum sky-tube edition

This Congress still has some must-pass legislation to complete.

That includes the FAA reauthorization bill, which contains a bunch of much-needed reforms for the agency. But they slipped in a doozy of a change: complete privatization of air traffic control. The plan is to create a separate, government-chartered, independent non-profit to run the whole show, with the intention, of course, that it will be run much more efficiently than the government ever could. I liked this quote from an unnamed conservative group in another Hill article:


“To us it is an axiomatic economic principle that user-funded, user-accountable entities are far more capable of delivering innovation and timely improvements in a cost-effective manner than government agencies.”

Axiomatic, eh? Well, I think I see your problem…

Anyway, it’s worth taking a step back to think about this proposal from a few different angles. First, let’s remember what the FAA does. Really, there are three main activities:

- Write regulations

- Allocate funds for aviation-related programs (AIP and similar) and

- Run ATC (Note: the FAA’s ATC arm is called “ATO,” but I’ll keep calling it ATC here)

Honestly, there has always been something of a conflict between the needs of air traffic control, where safety is the top priority and efficiency and cost come second, and the needs of the rest of the organization. It is a small miracle that the FAA’s ATC runs the safest airspace in the world. But miracle or not, it is a fact.

Furthermore, it is also true that ATC has been slow to modernize, for several reasons. First, yes, government bureaucracy, of course. But there are other reasons, such as Congress habitually cutting and delaying funding for new systems. (NB: when you are on temporary reauthorization, you don’t buy new things; programs do not progress. You just pay salaries.) Another problem is that the old systems, as cranky and obsolete as they are, work, and it’s just not a simple matter to replace a working system, tuned over decades, with new technology, particularly if you require no degradation in performance in the process.

So does this justify privatization? Will a private organization do better in this respect? Well, here is some food for thought, in no particular order:

- a private organization will use fees to fund itself. This might be good, because it should be able to raise all the money it needs, but then again, fees might grow without control. A private organization running ATC is essentially a monopoly. Government control is a monopoly, too, except that you can use the levers of democracy to manage it.

- a fee-run organization will be mostly responsive to whoever pays the fees. In this case, it would be the airlines, and among the airlines, the majors would have the most bargaining power. Is this the best outcome? How will small carriers fare when it comes time to assign landing slots or assign routes to flight plans? How will general aviation do under such a system? Will fees designed for B747s coming into KEWR snuff out the C172 traffic coming into KCDW?

- Regulatory capture is a problem for any industry-regulating government entity. Does appointing an all-industry board of directors to a private organization that assumes most of those functions “solve” that problem by making total capture a fait accompli?

- Will this new organization be self-supporting, or will it still depend on government money? How will it perform when there is an economic or industry slump? If there is a bankruptcy, who will foot the bill to keep the lights on?

- When the inevitable budget shortfalls come, how will labor fare? Will workers have to sacrifice their contracts in order to help save the company?

- I don’t know, but I’m guessing that nobody at the top of the FAA’s ATC today makes a million dollars a year. Will that change under a private organization? If so, where might that money come from?

- Does an emphasis on efficiency serve the flying public? For that matter, do the flying public’s interests diverge from those of the airlines, and if so, how are they represented in the new organization’s decision-making?

I honestly have not considered or studied this matter enough to have a strong opinion, but so much of it makes the hairs on the back of my neck stand up.

I’ll give the authors of this new bill credit for one thing: they managed to get the ATC union (NATCA) on board, essentially by promising continuity of their contracts and protections. I’m not sure if that comes with guarantees in perpetuity. One thing I noticed immediately is that current employees would be able to pay into the federal retirement system. New employees…

[ Full disclosure: I am a general aviation pilot and do not pay user fees to use ATC, and like it that way. I do understand that this is a subsidy I enjoy. ]

tech fraud, innovation, and telling the difference

I admit it. I’m something of a connoisseur of fraud, particularly technology fraud. I’m fascinated by it. That’s why I have not been able to keep my eyes off the unfolding story of Theranos. Theranos was a company formed to do blood testing with minute quantities of blood. The founder, who dropped out of Stanford to pursue her idea, imagined blood testing kiosks in every drugstore, making testing ubiquitous, cheap, safe, and painless. It all sounds pretty great in concept, but it seemed to me from the very start to lack an important hallmark of seriousness: evidence of a thoughtful survey of “why hasn’t this happened already?”

There were plenty of warning signs that this would not work out, but I think what’s fascinating to me is that the very same things that set off klaxons in my brain lured in many investors. For example, the founder dropped out of school, so had “commitment,” but no technical background in the art she was promising to upend. Furthermore, there were very few medical or testing professionals among her directors. (There was one more thing that did it for me: the founder liked to ape the presentation style and even fashion style of Steve Jobs. Again, there were people with money who got lured by that … how? The mind boggles.)

Anyway, there is, today, a strange current of anti-expert philosophy floating around Silicon Valley. I don’t know what to make of it. They do have some points. It is true that expertise can blind you to new ideas. And it’s also true that a lot of people who claim to be experts are really just walking sacks of rules-of-thumb and myths accreted over unremarkable careers.

At the same time, building truly innovative technology products is especially hard. I’m not talking about applying technology to hailing a cab. I’m talking about creating new technology. The base on which you are innovating is large and complex. The odds that you can add something meaningful to it through some googling seem vanishingly small.

But it is probably non-zero, too. Which means that we will always have stories of the iconoclast going against the grain to make something great. But are those stories explanatory? Do they tell us about how innovation works? Are they about exceptions or rules? Should we mimic successful people who defy experts, by defying experts ourselves, and if we do, what are our chances of success? And should we even try to acquire expertise ourselves?

All of this brings me to one of my favorite frauds in progress: uBeam. This is a startup that wants to charge your cell phone, while it’s in your pocket, by means of ultrasound, and it raised my eyebrows the moment I heard about it. They haven’t raised quite as much money as Theranos did, but their technology is even less likely to work. (There are a lot of reasons, but they boil down to the massive attenuation of ultrasound in air; the danger of exposing people to high levels of ultrasound; the massive energy loss from sending out sound over a wide area, only to be received over a small one [or the difficulty and danger of forming a tight beam]; the difficulty of penetrating clothes, purses, and phone holders; and the very low likelihood that a phone’s ultrasound transducer will be oriented close to normal to the beam source.) And if they somehow manage to make it work, it’s still a terrible idea, as it will be grotesquely inefficient.
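To put one of those reasons into rough numbers, here is a back-of-the-envelope sketch of geometric spreading alone. It assumes, hypothetically, an emitter on a wall that spreads its power evenly over a hemisphere, a phone transducer with a 1 cm² face pointed straight at it, and a distance of 3 m. It ignores absorption in air entirely, which would only make things worse. None of these numbers come from uBeam.

```python
import math


def captured_fraction(receiver_area_m2, distance_m, spread_sr=4 * math.pi):
    """Fraction of radiated power landing on a small receiver facing the source.

    Assumes power spreads evenly over a solid angle of `spread_sr` steradians
    (4*pi = full sphere, 2*pi = hemisphere from a wall-mounted emitter) and
    ignores absorption in air.
    """
    area_at_distance = spread_sr * distance_m ** 2  # area the power is spread over
    return min(1.0, receiver_area_m2 / area_at_distance)


# Hypothetical numbers: a 1 cm^2 transducer, 3 m from a wall-mounted emitter.
frac = captured_fraction(1e-4, 3.0, spread_sr=2 * math.pi)
print(f"{frac:.2e} of the emitted power reaches the phone")
```

Under those assumptions, fewer than two parts in a million of the emitted power land on the phone. Focusing the sound into a tight beam avoids this loss, which is exactly where the difficulty and danger of beam-forming come in.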

What I find so fascinating about this startup is that the founder is ADAMANT that people who do not believe it will work are just trapped in an old paradigm. They are incapable of innovation — broken, in a way. She actively campaigns for “knowledge by Google” and against expertise.

As an engineer by training and genetic predisposition, this TEDx talk really blows my mind. I still cannot quite process it:

Lies, damn lies, and energy efficiency

This afternoon I was removing a dead CFL from a fixture in the kitchen when it broke in my hand, sending mercury-tainted glass towards my face and the floor. Our kitchen had been remodeled by the previous owner before he put the house up for sale, and he brought it up to compliance with California Title 24 lighting requirements, which at the time could only be met with CFL fixtures that used bulb bases incompatible with the ubiquitous “Edison base” used by incandescent bulbs. After all, with regular bases, what would stop someone from replacing the CFLs with awful incandescents?

I’ve never liked the setup, and part of the reason is the many compromises that come with CFLs. Though they have certainly saved energy, they’ve been a failure on several other levels. First, they are expensive: between $8 and $14 in my experience. (These are G24-based bulbs, not the cheap Edison-compatible retrofits.) Furthermore, they fail. A lot. We’ve lived in this house since 2012 and our kitchen has six overhead cans. I’ve probably replaced 7 or 8 bulbs in that time. Finally, the fancy CFL-only bases come with electronic ballasts built into the fixture. One has failed already, and it can only be replaced from the attic. I hate going up there, so I haven’t done it, even though it happened six months ago. The ballasts also stop me from putting in LED retrofits; I’ll have to remove them all first.

The thing is, only a few years ago, it seemed like every environmentalist and energy efficiency expert was telling us (often in pleading, patronizing tones) to switch to CFLs. They cost a bit more, but based on energy savings and longer life, they’d be cheaper in the long run. But it turns out that just wasn’t true. It was theoretically true but practically not. Unfortunately, this is not uncommon in the efficiency world.

There were other drawbacks to CFLs. They did not fit lamps that many people had. The color of their light was irksome. There was flicker that some people could detect. When they broke, they released mercury into your home that, if it fell into carpet or crevices in the floor, would be there essentially forever. Most could not dim, and those that could had a laughably limited dimming range. Finally, they promised longevity, but largely failed to deliver.

Basically, for some reason, experts could not see what became plainly obvious to Joe Homeowner: CFLs kinda suck.

So, was pushing them a good idea, perhaps based on what was known at the time?

I would argue no. This was a case of promoting a product that solved a commons problem (environmental impact of energy waste) with something whose private experience was worse in almost every way possible. Even the economics, touted as a benefit, failed to materialize in most cases.

I would argue that the rejection of (and even political backlash against) CFLs was entirely predictable, because 1) overpromising benefits, 2) downplaying drawbacks, and 3) adding regulation do not make people happy. What does make people happy is a better product.

So far, it looks like LEDs are the better product we need. They actually are better than incandescent bulbs in most ways, and their cost is coming down. I’m sure their quality will come down, too, as manufacturers explore the price/performance/reliability frontier, and we may end up throwing away more LED fixtures than any environmentalist could imagine. Not so far; things are holding. They’re a lighting efficiency success, perhaps the first since incandescent bulbs replaced gas and lamp oil.

The lesson for energy efficiency advocates, I think, is:

- UNDERpromise and OVERdeliver

- do not try to convince people that an inferior experience is superior. Sure, some will drink the Kool-Aid, but most won’t. Consider what you’re asking of people.

- Do not push a technology before its time

- Do not push a technology after its time (one whose days are numbered)

Simpler times, news edition

The other evening, I was relaxing in my special coffin filled with semiconductors salvaged from 1980s-era consumer electronics, thinking about how tired I am of hearing about a certain self-funded presidential candidate, or guns, or terrorism … and my mind wandered to simpler times. Not simpler times without fascists and an easily manipulated populace, but simpler times when you could more easily avoid pointless and dumb news while still getting normal news.

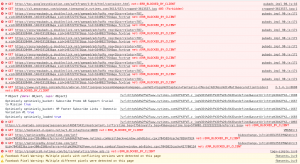

It wasn’t long ago that I read news, or at least “social media,” on Usenet, a system for posting on message boards that predates the web and even the Internet. My favorite “news reader” (software for reading Usenet) was called trn. I learned all its clever single-key commands and mastered a feature common to most serious news readers: the kill file.

Kill files are conceptually simple. They contain a list of rules, usually specified as regular expressions, that determine which posts on the message board you will see. Usenet was the wild west, and it always had a lot of garbage on it, so this was a useful feature. Someone is being rude, or making ridiculous, illogical arguments? <plonk> Into the kill file goes their name. Enough hearing about terrorism? <plonk> All such discussions disappear.
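The idea is simple enough to sketch in a few lines of Python. This is not trn's actual kill-file syntax (trn has its own format); it is a hypothetical rule list of (header field, regular expression) pairs applied to made-up posts, showing the <plonk> principle: any post matching any rule disappears.

```python
import re

# Hypothetical kill file: each rule is (header field, regular expression).
KILL_FILE = [
    ("author", r"rude_poster@example\.com"),  # <plonk>: one person, gone
    ("subject", r"(?i)terroris[mt]"),         # <plonk>: a whole topic, gone
]


def is_killed(post, rules=KILL_FILE):
    """Return True if any rule's pattern matches the named field of the post."""
    return any(re.search(pattern, post.get(field, "")) for field, pattern in rules)


posts = [
    {"author": "rude_poster@example.com", "subject": "You are all wrong"},
    {"author": "friend@example.org", "subject": "Terrorism, again"},
    {"author": "friend@example.org", "subject": "Favorite news reader?"},
]

visible = [p["subject"] for p in posts if not is_killed(p)]
print(visible)  # ['Favorite news reader?']
```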

Serious users of Usenet maintained carefully curated kill files, and the result was generally a pleasurable reading experience.

Of course, technology moves on. Most people don’t use text-based news readers anymore, and Facebook is the de-facto replacement for Usenet. And in fact, Facebook is doing curation of our news feed – we just don’t know what it is they’re doing.

All of which brings me to musing about why Facebook doesn’t support kill files, or any sophisticated system for controlling the content you see. We live in more advanced times, so we should have more advanced software, right?

More advanced, almost certainly, but better for you? Maybe not. trn ran on your computer, and its authors (it’s open source) had no pecuniary interest in your behavior. Facebook, of course, is a media company, not a software company, and in any case, you are not the customer. The actual customers do not want you to have kill files, so you don’t.

Though I enjoy a good Facebook bash more than most people, I must also admit that Usenet died under a pile of its own garbage content. It was an open system and, after a gajillion automated spam posts, even aggressive kill files could not keep up. Most users abandoned it. Perhaps if someone had had a pecuniary interest in making it “work,” things would have been different. Also, if it had had better support for cat pictures.